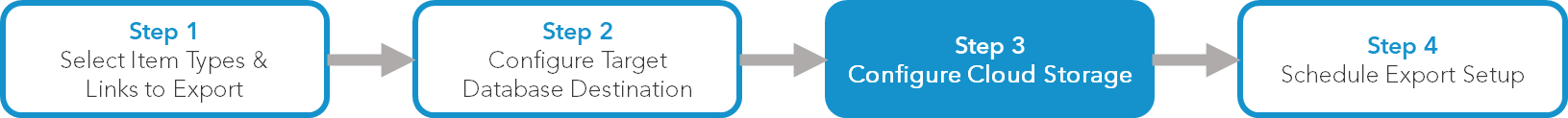

Step 3 - Configure Cloud Storage

S3 and Amazon Redshift

You can use Data Warehouse Export to extract data to S3 or Amazon Redshift.

- Enter a bucket name where the data is to be stored.

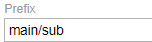

- Enter a prefix if you want to store files extracted from AdaptiveWork in a subfolder within the bucket. Enter the main folder and the subfolder in this format: main/sub

- Select the File Type.

- Select the region to which the files will be uploaded.

- Enter the AWS Access Key.

- Enter the AWS Secret Access Key.

- Click Test Cloud Storage Connection.

Note: AdaptiveWork recommends performing this test to ensure successful export to your selected cloud storage system. If the connection fails, make sure you’ve entered your account information correctly and try again.

File Limitation: 1000 rows
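The main/sub prefix format described above can be illustrated with a minimal Python sketch. It assumes the prefix is simply prepended to the file name to form the S3 object key. The exact key layout AdaptiveWork produces is not described here, and the function name `build_object_key` and the file name `tasks.csv` are hypothetical.

```python
def build_object_key(prefix: str, file_name: str) -> str:
    """Join an optional folder prefix and a file name into an S3-style object key.

    Assumption: the prefix is prepended to the file name with "/" separators.
    """
    # Drop empty segments so "main/sub", "/main/sub/" and "main//sub" behave the same.
    parts = [segment for segment in prefix.split("/") if segment]
    parts.append(file_name)
    return "/".join(parts)


if __name__ == "__main__":
    print(build_object_key("main/sub", "tasks.csv"))  # main/sub/tasks.csv
    print(build_object_key("", "tasks.csv"))          # tasks.csv
```

With an empty prefix, the file sits at the top level of the bucket; with main/sub, it sits two folders down.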

Box

You can use Data Warehouse Export to extract data to Box.

- Click Associate with Box Account and log into Box.

- Optional - Use a prefix to store AdaptiveWork extracted files in a subfolder under the selected folder.

- Select the File Type.

File Limitation: 5000 rows

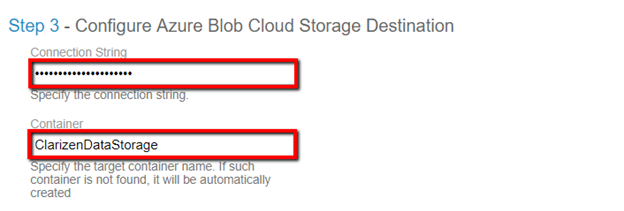

Azure Blob Storage

You can use Data Warehouse Export to extract data to Azure Blob Storage.

Use a Connection String or Shared Access Signature (SAS) to connect to Azure.

- Connection String:

  - Enter the Connection String you generated in Azure.

  - In the Container, specify the target container name (case sensitive). If the container is not found, it will be automatically created.

- Shared Access Signature (SAS):

  - Specify the Shared Access Signature from Azure

- Click Test Cloud Storage Connection

- Select the File Type

Note: AdaptiveWork recommends performing this test to ensure successful export to your selected cloud storage system. If the connection fails, make sure you’ve entered your account information correctly and try again.

File Limitation: 1000 rows
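If the connection test fails, it can help to check the values before re-entering them. The following Python sketch is one way to do that locally, not part of AdaptiveWork. It assumes a standard Azure Storage connection string (semicolon-separated `Key=Value` pairs) and applies Azure's published container naming rules (3 to 63 characters, lowercase letters, digits, and single hyphens). The function names and the sample account values are hypothetical placeholders.

```python
import re


def parse_connection_string(conn_str: str) -> dict:
    """Split an Azure Storage connection string into its key/value settings."""
    settings = {}
    for part in conn_str.strip().split(";"):
        if not part:
            continue  # tolerate a trailing ";"
        # Split on the first "=" only: AccountKey is base64 and may end in "=".
        key, sep, value = part.partition("=")
        if not sep:
            raise ValueError(f"Malformed segment: {part!r}")
        settings[key] = value
    return settings


# 3-63 characters, lowercase letters/digits/hyphens, starts and ends with a
# letter or digit, and no consecutive hyphens.
_CONTAINER_PATTERN = re.compile(r"(?!.*--)[a-z0-9][a-z0-9-]{1,61}[a-z0-9]")


def is_valid_container_name(name: str) -> bool:
    """Return True if the name satisfies Azure's container naming rules."""
    return _CONTAINER_PATTERN.fullmatch(name) is not None


if __name__ == "__main__":
    # Placeholder values only; substitute the string generated in Azure.
    sample = (
        "DefaultEndpointsProtocol=https;AccountName=exampleaccount;"
        "AccountKey=ZmFrZS1rZXk=;EndpointSuffix=core.windows.net"
    )
    print(sorted(parse_connection_string(sample)))
    print(is_valid_container_name("adaptivework-export"))
    print(is_valid_container_name("AdaptiveWork"))
```

Because Azure only accepts lowercase container names, a name containing uppercase letters is rejected by this check rather than treated as a new container.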

SFTP Server

You can use Data Warehouse Export to extract data to an SFTP Server.

- Access your Data Warehouse and follow the instructions for Step 1

- In Step 2, select SFTP Server in the Database Type field and complete the other settings:

  - Host - specify the IP Address

  - Port - set to 22 by default

  - Username

  - Password

- Complete fields in Step 3:

  - Directory Name

  - File Type

Important: A request for a Security / Firewall IP whitelisting exception must be submitted. Please contact your CSM or AE or submit a case and provide the SFTP Server IP Address details.

File Limitation: 1000 rows
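Before submitting the whitelisting request, the Host and Port values can be sanity-checked with a short Python sketch like the one below. It uses only the standard library; the function names are hypothetical, and `203.0.113.10` is a documentation-only address used as a placeholder. Note the limitation: a successful connection from your own machine does not show that AdaptiveWork can reach the server, because the firewall exception applies to AdaptiveWork's addresses, not yours.

```python
import ipaddress
import socket


def validate_sftp_settings(host: str, port: int = 22) -> list:
    """Return a list of problems with the Host and Port values (empty if none)."""
    problems = []
    try:
        ipaddress.ip_address(host)  # accepts IPv4 and IPv6 literals
    except ValueError:
        problems.append(f"Host {host!r} is not a valid IP address")
    if not 1 <= port <= 65535:
        problems.append(f"Port {port} is outside the range 1-65535")
    return problems


def can_connect(host: str, port: int = 22, timeout: float = 5.0) -> bool:
    """Return True if a TCP connection to host:port succeeds from this machine."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # covers refused connections, timeouts, and unreachable hosts
        return False


if __name__ == "__main__":
    # Placeholder address; replace with your SFTP server's IP address.
    print(validate_sftp_settings("203.0.113.10"))
```

Calling `can_connect` with your server's address and port then confirms only that the port is open from where the script runs.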

Google Cloud Storage

You can use Data Warehouse Export to extract data to Google Cloud Storage.

- Access your Data Warehouse and follow the instructions for Step 1

- In Step 2, select Google Cloud Storage in the Database Type field. All other fields are disabled.

- In Step 3, in the Google Cloud Storage Private Key field, enter the private key in JSON format that is used to access Google Cloud Storage.

- In the Bucket Name field, enter the designated bucket for this service.

- To store the extracted files in a subfolder under the bucket, enter a name for the subfolder in the Prefix field.

File Limitation: 1000 rows
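A malformed private key is a common reason for a failed setup, so it can be worth checking the JSON before pasting it in. The Python sketch below assumes the key is a Google Cloud service account key file, which normally contains fields such as `type`, `project_id`, `private_key`, and `client_email`. Whether AdaptiveWork requires all of these is an assumption, and the function name is hypothetical.

```python
import json

# Fields normally present in a Google Cloud service account key file.
# Assumption: the private key expected here is of this kind.
EXPECTED_FIELDS = ("type", "project_id", "private_key_id", "private_key", "client_email")


def check_service_account_key(raw: str) -> list:
    """Return a list of problems found in the key JSON (empty if none)."""
    try:
        key = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"Not valid JSON: {exc.msg}"]
    if not isinstance(key, dict):
        return ["Top-level JSON value must be an object"]

    # Report every expected field that is absent or empty.
    problems = [f"Missing field: {name}" for name in EXPECTED_FIELDS if not key.get(name)]
    if key.get("type") and key["type"] != "service_account":
        problems.append(f"Unexpected type: {key['type']!r}")
    return problems


if __name__ == "__main__":
    # Deliberately incomplete sample to show the reported problems.
    print(check_service_account_key('{"type": "service_account"}'))
```

An empty list means the key parses as JSON and has the expected fields; it does not confirm that the key grants access to the bucket.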